Enhancing HFC networks by applying neural design principles

The cable industry’s reputation for sophisticated technology sits alongside a perception that it is slow-moving. With continuous demand for services that offer lower latency, greater bandwidth, lower power consumption, and minimal cost, operators need solutions that keep up with consumer and market demands.

As the speed at which these demands change puts increasing pressure on cable’s agility, we want to know whether there is anything to learn from the basics of AI.

This blog considers novel ways to elevate hybrid fiber coaxial (HFC) plants by comparing and contrasting them with emergent, so-called ‘neural networks’. How possible is it to apply the principles of newer, automated technologies?

Neural networks: the basics

Often associated with AI, neural networks are mathematical descriptions of nodes and their connections. These descriptions are loosely based on neurons and their connections within the human brain.

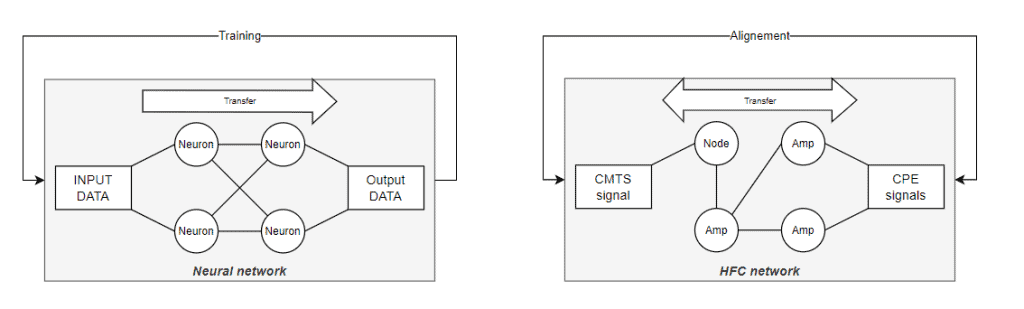

The first step in developing a neural network is meticulous design: the structure is defined, along with the number and order of nodes and connections required.

The network is then ‘trained’. As data is fed into the network, the output is extracted and compared with the intended output to measure task performance. The transfer functions between nodes are then adjusted so that the network can ‘learn’ the correlation between input and intended output.

Once training is complete and the network performs well at generating predictions and mapping input to output data, it is ready to be deployed. At this point, the ‘training’ stops and the network is only used to calculate output from input data.
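As a rough illustration of the train-then-deploy cycle described above, here is a minimal sketch of the smallest possible ‘network’: a single node with one weight and one bias, trained by gradient descent. The data, learning rate, and function names are all assumptions made for this example, not part of any Technetix product.

```python
def train(samples, epochs=200, lr=0.05):
    """Training: fit y ~ w*x + b by gradient descent on the squared error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in samples:
            err = (w * x + b) - y   # compare actual output with intended output
            w -= lr * err * x       # adjust the weight ...
            b -= lr * err           # ... and the bias to shrink the error
    return w, b


def predict(w, b, x):
    """Deployment: parameters are frozen and only the output is calculated."""
    return w * x + b


# Hypothetical training data generated from y = 2x + 1
samples = [(k / 10, 2 * (k / 10) + 1) for k in range(-10, 11)]
w, b = train(samples)
print(round(w, 3), round(b, 3))
print(round(predict(w, b, 3.0), 3))
```

After training, the weight and bias settle close to 2 and 1, and `predict` simply reuses them without any further adjustment.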

HFC networks: the basics

These physical networks are a combination of coaxial cable and fiber optic infrastructure, consisting of amplifiers, nodes, taps, and connector components. In much the same way as the neural network begins, HFC structures are designed: an appropriate cable plan is plotted out, then the number and hierarchy of amplifiers and nodes are identified for optimized function. Considerations such as homes passed, terrain features, and pre-existing network and cable mapping are taken into account to ensure the design meets demand.

Technicians are then deployed into the field to start the alignment process and device setup. Test signals fed into the network are captured and measured at the headend by cable modem termination systems (CMTS) and at the subscriber end by customer premises equipment (CPE). This signal performance monitoring at both ends enables technicians to adjust amplifier settings as required.

Once alignment is complete, the network is put into service to transfer signals from the CPE to the CMTS, and vice-versa from the CMTS to the CPE.
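The one-by-one alignment described above can be pictured with a toy model: a cascade of amplifiers separated by cable spans, where each amplifier’s gain is set so that its output returns to a target level. This is only a sketch under simplifying assumptions (a single flat signal level in dBmV, no tilt or frequency dependence), and every number and name in it is hypothetical.

```python
def align_cascade(source_level_dbmv, span_losses_db, target_dbmv, max_gain_db=40.0):
    """Visit each amplifier in order and set its gain to hit the target output level."""
    gains = []
    level = source_level_dbmv
    for loss in span_losses_db:
        level_in = level - loss                      # level measured at the amplifier input
        gain = min(max(target_dbmv - level_in, 0.0), max_gain_db)
        gains.append(gain)
        level = level_in + gain                      # level leaving this amplifier
    return gains, level


# Hypothetical plant: 45 dBmV at the node, three spans with different cable losses
gains, final_level = align_cascade(45.0, [22.0, 18.5, 25.0], target_dbmv=45.0)
print(gains, final_level)
```

Each pass through the loop stands in for one site visit: the setting at an amplifier depends on what was measured there after everything upstream was already adjusted.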

The neural model’s design-train-deploy process provides a reasonably analogous methodology to that of HFC’s design-align-deploy trifecta. Furthermore, the objective of both can be expressed in a similar fashion: passing data/signals through the network to generate output with specific characteristics.

Neural v HFC: the differences

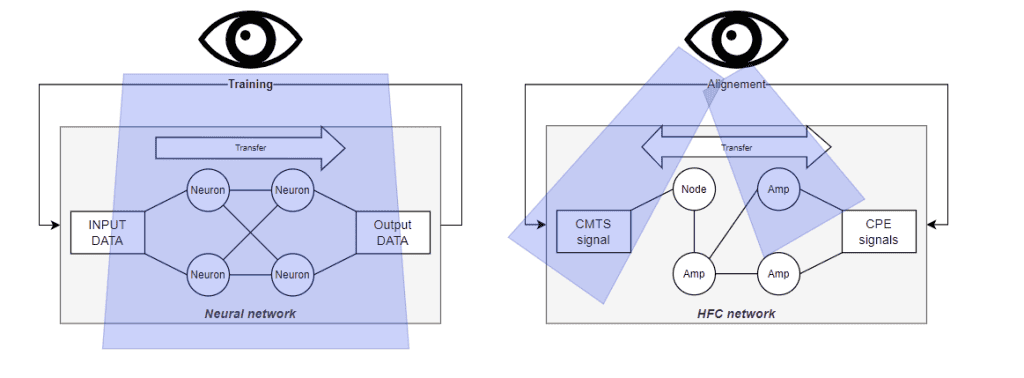

Significantly, the alignment and deployment of HFC plants is fairly labor-intensive at each stage: a hands-on process performed on-site involving technician visits to each amplifier location. In contrast, ‘training’ a neural network is more automatic, with the entire setup active during the computationally driven process.

Keeping these similarities and differences in mind, it is possible to apply the logic of neural networks to traditional HFC plants. If technicians can capture data from the entire network, adjustments can be applied network-wide and simultaneously as the system ‘learns’ the mapping between input and output data. This makes for a fast and efficient ‘training’ stage.

Traditionally, HFC alignment, the equivalent of ‘training’, entails visits to each amplifier location, one by one. While this time-consuming process previously fulfilled the need to establish adequate signal to and from the home, it cannot account for the network as a whole. With growing demand for greater bandwidth, lower latency, and better power efficiency, today’s standards are higher and alignment must be carried out faster.
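To make the contrast concrete, the sketch below treats alignment the way a neural network treats training: levels are read from every amplifier at once, and all gains are nudged together by gradient descent until the whole cascade sits at the target. It is a toy model with hypothetical numbers and a step size chosen for this three-amplifier case, not a description of how any particular product works.

```python
def network_levels(source, losses, gains):
    """Telemetry stand-in: output level at every amplifier for the current gains."""
    levels, level = [], source
    for loss, gain in zip(losses, gains):
        level = level - loss + gain
        levels.append(level)
    return levels


def align_network(source, losses, target, steps=500, lr=0.15):
    """Adjust every gain simultaneously to minimise the squared level error."""
    gains = [0.0] * len(losses)
    for _ in range(steps):
        errors = [lvl - target for lvl in network_levels(source, losses, gains)]
        # Gain i shifts every level at or after amplifier i, so its gradient is
        # the sum of those downstream errors (constant factor folded into lr).
        grads = [sum(errors[i:]) for i in range(len(gains))]
        gains = [g - lr * d for g, d in zip(gains, grads)]
    return gains


# Same hypothetical plant as a technician would align span by span
gains = align_network(45.0, [22.0, 18.5, 25.0], target=45.0)
print([round(g, 3) for g in gains])
```

In this idealised model the result matches what sequential visits would produce. The difference lies in the process: every update uses measurements from the whole network, so additional objectives could be added to the error term without changing the overall approach.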

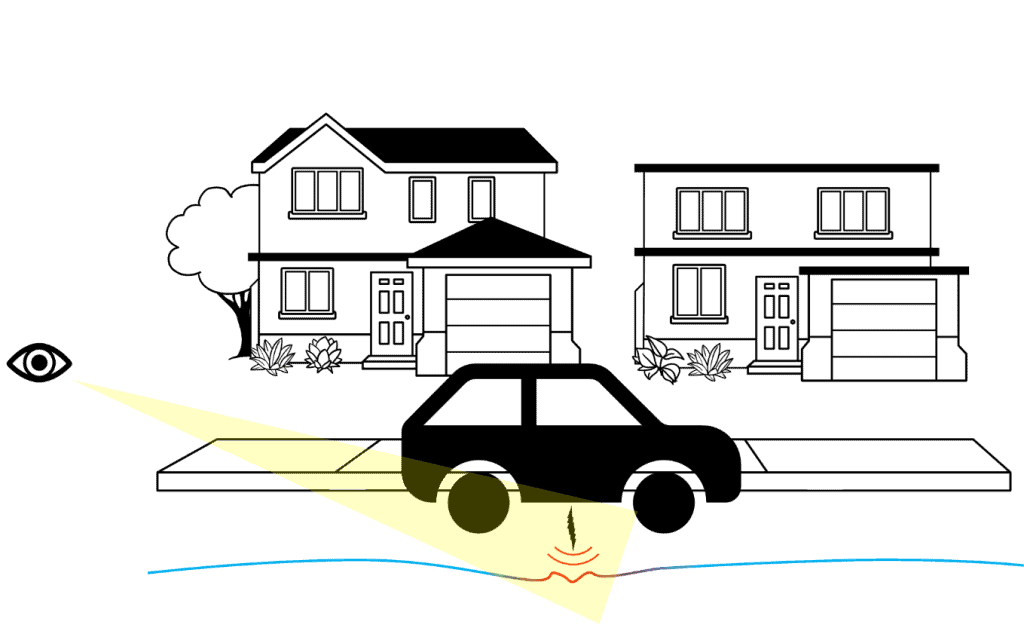

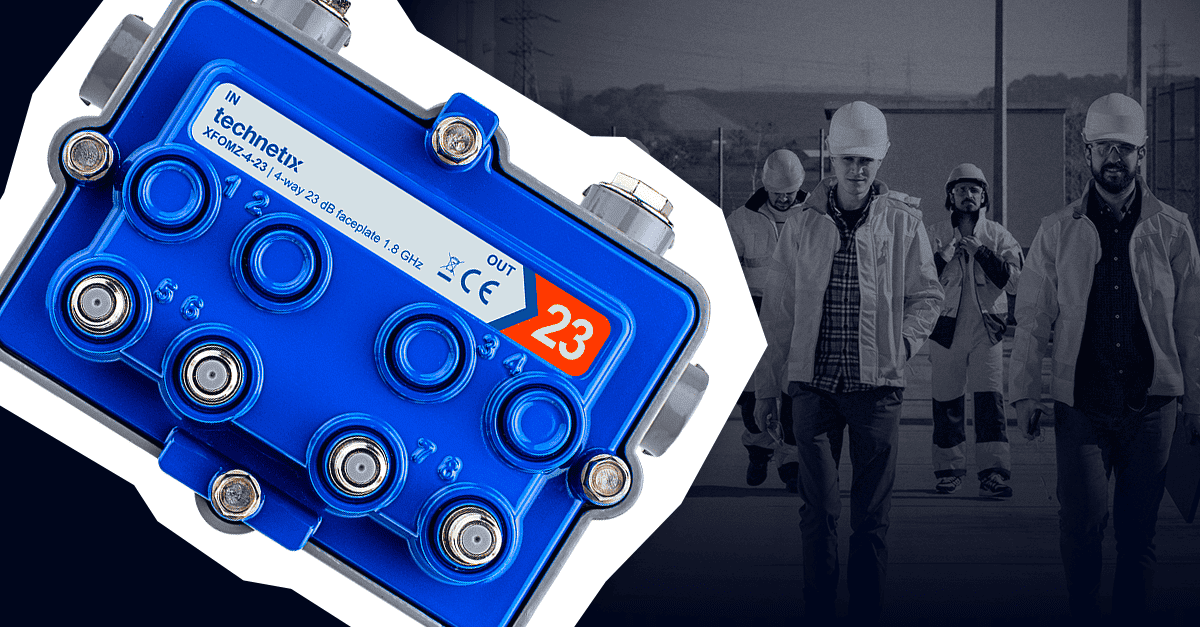

To optimize what’s possible from legacy HFC plants, Technetix have fully embraced the power of AI in the development of ‘smart amplifiers’, cost-effective transponders, and innovative algorithms. Collecting telemetry from amplifiers provides a complete picture of the network, and leveraging algorithms based on neural networks greatly improves the ability to achieve downstream alignment, enables better fault and noise detection, and optimizes the upstream path.

NeuronX

Technetix’ overarching network optimization system, NeuronX, incorporates data polling using in-amplifier transponders, and data processing to extract meaningful insights from collected data. At its backend, the technology consists of a data polling and processing engine, and a machine learning model. At its frontend, readings are displayed on a user interface (UI) dashboard.

NeuronX combines network element control with optimization of bandwidth and power consumption, and sends new configurations and settings commands to amplifiers, nodes, CMTS and modems. To detect and communicate anomalies, proactive network maintenance (PNM) systems, such as OpenVault, use software to process telemetry data from the modem, CMTS and/or amplifier. More targeted settings can also be changed using tools such as profile management application (PMA) systems, which adjust channel profile modulation.

This capacity to optimize output and performance by actively adjusting the configuration of every network element is what gives NeuronX an edge. It greatly expands what can be done with PNM and PMA.
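The anomaly detection step mentioned above can be illustrated with a simple statistical check on amplifier telemetry. The sketch below flags devices whose reading is a robust outlier relative to the rest of the fleet, using the modified z-score based on the median absolute deviation. This is a generic, swapped-in technique for illustration; it is not a description of how NeuronX or OpenVault process data, and the device IDs and readings are invented.

```python
from statistics import median


def flag_anomalies(readings, threshold=3.5):
    """Return device IDs whose reading is a robust outlier (modified z-score)."""
    values = list(readings.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)   # median absolute deviation
    if mad == 0:
        return []                                # no spread, nothing to flag
    return [
        device
        for device, value in readings.items()
        if 0.6745 * abs(value - med) / mad > threshold
    ]


# Hypothetical signal-to-noise readings (dB) polled from five amplifiers
snr_db = {"amp-01": 38.2, "amp-02": 37.9, "amp-03": 38.5, "amp-04": 29.1, "amp-05": 38.0}
print(flag_anomalies(snr_db))
```

A median-based score is used here because a single badly behaved amplifier would distort a plain mean and standard deviation, which could hide the very fault being looked for.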

Fiber optic networks: why?

Optical networks benefit from their capacity to carry large volumes of information at high speeds, with less signal loss. Unlike traditional copper cable networks, which rely on electrical signals, fiber networks transmit data over long distances far more efficiently.

Fiber networks transmit light through glass filaments. These are smaller in diameter than a human hair. Light is guided within the fiber by total internal reflection, ensuring that the signal remains clear and strong, even when traveling over very long distances.
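Total internal reflection only occurs when light strikes the boundary between the fiber core and its surrounding cladding at an angle (measured from the normal) greater than the critical angle, which follows from Snell’s law as arcsin(n_cladding / n_core). The short sketch below computes it; the refractive indices are illustrative assumptions rather than figures for any specific fiber.

```python
import math


def critical_angle_deg(n_core, n_cladding):
    """Critical angle from Snell's law; requires the core to be optically denser."""
    if n_cladding >= n_core:
        raise ValueError("total internal reflection needs n_core > n_cladding")
    return math.degrees(math.asin(n_cladding / n_core))


def is_guided(incidence_deg, n_core, n_cladding):
    """True if a ray at this angle from the normal is totally reflected."""
    return incidence_deg > critical_angle_deg(n_core, n_cladding)


# Assumed example indices for a glass core and cladding
N_CORE, N_CLADDING = 1.48, 1.46
print(round(critical_angle_deg(N_CORE, N_CLADDING), 1))
print(is_guided(85.0, N_CORE, N_CLADDING), is_guided(70.0, N_CORE, N_CLADDING))
```

Because the two indices are so close, only rays travelling nearly parallel to the fiber axis stay trapped in the core.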

In addition to these main characteristics, fiber optic networks have several other advantages:

They support data transmission at much higher speeds than copper-based networks. With capacities that can exceed a terabit per second, they are ideal for high-demand applications such as high-definition video streaming, video conferencing, data centers, high-speed internet connections, and 5G network infrastructure.

Signals suffer less from attenuation and distortion. Compared to copper cables, fiber provides more stable and higher-quality connections, reducing the need to amplify signals repeatedly for transmission over long distances.

Optical fiber is not affected by electromagnetic interference. This provides improved signal quality and reliability compared to copper wires, which are susceptible to interference.

Signals are difficult to intercept without interrupting transmission. This makes fiber optic networks much more secure than copper cable plants.
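The attenuation point can be put into rough numbers with a simple loss-budget calculation: how far can a signal travel before it has lost a given number of decibels and needs amplifying? The fiber figure below is a typical value for single-mode fiber near 1550 nm. The coax figure and the budget are illustrative assumptions only, since real coax loss depends heavily on cable type and frequency.

```python
def max_span_km(loss_budget_db, attenuation_db_per_km):
    """Longest run before the signal has used up the whole loss budget."""
    if attenuation_db_per_km <= 0:
        raise ValueError("attenuation must be positive")
    return loss_budget_db / attenuation_db_per_km


BUDGET_DB = 25.0         # hypothetical allowable loss between active devices
FIBER_DB_PER_KM = 0.2    # typical single-mode fiber near 1550 nm
COAX_DB_PER_KM = 50.0    # assumed for illustration; varies with cable and frequency

fiber_km = max_span_km(BUDGET_DB, FIBER_DB_PER_KM)
coax_km = max_span_km(BUDGET_DB, COAX_DB_PER_KM)
print(f"fiber: {fiber_km:.0f} km, coax: {coax_km:.1f} km")
```

Under these assumptions the same budget stretches over roughly 125 km of fiber but only about half a kilometre of coax, which is why coaxial plants rely on cascades of amplifiers.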

Optical communications powered by AI

Cloud computing, new-generation wireless connections (5G or Wi-Fi 7), IoT, and the growing use of AI itself are all driving increased bandwidth requirements, which compels us to consider the newest technologies around fiber optic networks.

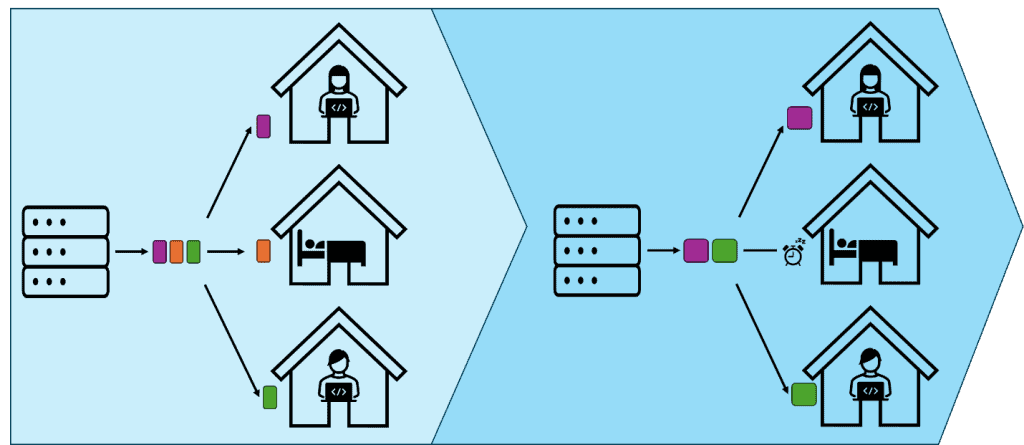

While fiber optic networks have traditionally been managed through manual processes, this method of monitoring and optimization becomes less realistic as the scale of these networks grows.

Thanks to the mechanical properties of optical fiber and its immunity to electromagnetic fields, AI analysis of optical signals can predict potential mechanical failures and detect issues at their origin. Issues can then be classified quickly so that preventative measures are taken before major problems occur, making maintenance and repairs more efficient.

Handling traffic with large volumes of data is also an important aspect of network management. Incorporating more services or improving network quality while data volumes increase can lead to network saturation. AI helps existing systems redirect traffic more efficiently, not only calculating the shortest route to a destination but also adding context on network status and node congestion. All this helps achieve more efficient networks, avoiding bottlenecks, lowering latency and improving service.
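The idea of routing on more than distance alone can be sketched with a classic shortest-path search in which each link’s cost is inflated by the congestion of the node it leads to. The search itself is plain Dijkstra rather than a learned model; in practice the congestion values might come from a predictive model. The topology, load figures, and cost formula are assumptions made for this example.

```python
import heapq


def best_path(graph, congestion, start, goal, penalty=1.0):
    """Dijkstra with cost = distance * (1 + penalty * congestion of the next node)."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for nxt, dist in graph.get(node, {}).items():
            if nxt not in visited:
                load = congestion.get(nxt, 0.0)   # 0.0 = idle, 1.0 = saturated
                step = dist * (1 + penalty * load)
                heapq.heappush(queue, (cost + step, nxt, path + [nxt]))
    return float("inf"), []


# Hypothetical four-node network: the short route runs through a busy node B
graph = {"A": {"B": 1, "C": 2}, "B": {"D": 1}, "C": {"D": 2}, "D": {}}
congestion = {"B": 0.9, "C": 0.1}

print(best_path(graph, congestion, "A", "D", penalty=0.0)[1])
print(best_path(graph, congestion, "A", "D", penalty=5.0)[1])
```

With the penalty switched off, the search picks the shortest route through B. Once congestion is weighted in, the longer but quieter route through C becomes the cheaper choice.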

As this technology evolves and continues to reveal new capabilities, Technetix will keep exploring new areas for the improvement of our products. The result will simplify our equipment and offer enhanced reliability and efficiency.

Co-author – Chris Beem, CTO Engineer

Chris is based at Technetix’ CTO department in The Netherlands, and has been a key member of the team there for six years. He specializes in AI and how the broadband industry can apply it for optimizing the performance and efficiencies of outside plants. His research in this area gave rise to NeuronX, Technetix’ AI-powered network project which Chris leads.

Co-author – Santiago Lazaro, Sales Engineer, Europe

Based at Technetix’ operation in Zaragoza, Spain, Santiago is a telecommunications engineer and joined the European SE team in April 2024. With a background in developing guidance for LLM improvements, and work on multidisciplinary systems to harmonize software deployments with comms systems hardware, his specialist knowledge of neural networks is driving Technetix’ research into its AI-powered offering.